
1 Environment setup

1.1 Host list

| Role | Hostname | IP |
|------|----------|----|
| mon | node1 | 192.168.1.10 |
| mon | node2 | 192.168.1.11 |
| mon | node3 | 192.168.1.12 |

1.2 OS version

    [root@node1 ~]# cat /etc/redhat-release
    CentOS Linux release 7.5.1804 (Core)
    [root@node2 ~]# cat /etc/redhat-release
    CentOS Linux release 7.5.1804 (Core)
    [root@node3 ~]# cat /etc/redhat-release
    CentOS Linux release 7.5.1804 (Core)

1.3 Configure the yum repositories

The Alibaba Cloud (Aliyun) mirrors are used here:

    wget -qO /etc/yum.repos.d/CentOS-Base.repo http://mirrors.aliyun.com/repo/Centos-7.repo
    wget -qO /etc/yum.repos.d/epel.repo http://mirrors.aliyun.com/repo/epel-7.repo

Besides the CentOS and EPEL repositories, a Ceph repository is also needed. Again the Aliyun mirror is used:

    [root@node1 ~]# cat /etc/yum.repos.d/Aliyun-ceph.repo
    [ceph]
    name=ceph
    baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
    gpgcheck=0

    [ceph-noarch]
    name=cephnoarch
    baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
    gpgcheck=0

Note: the Ceph repo file has to be created by hand.
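You can also write the repo file from the shell in one go. This is a minimal sketch that assumes the same Aliyun Jewel mirror URLs shown above. `write_ceph_repo` is a hypothetical helper name.

```shell
#!/bin/bash
# Hypothetical helper: write the Aliyun Ceph Jewel repo definition shown above.
# Usage: write_ceph_repo /etc/yum.repos.d/Aliyun-ceph.repo
write_ceph_repo() {
    local repo_file="$1"
    # The quoted 'EOF' keeps the heredoc literal (no variable expansion).
    cat > "$repo_file" <<'EOF'
[ceph]
name=ceph
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/x86_64/
gpgcheck=0

[ceph-noarch]
name=cephnoarch
baseurl=https://mirrors.aliyun.com/ceph/rpm-jewel/el7/noarch/
gpgcheck=0
EOF
}
```

Run it as root on each node. Then refresh the metadata with `yum makecache` before installing the packages in 2.1.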

2 Deploy the monitors (mon)

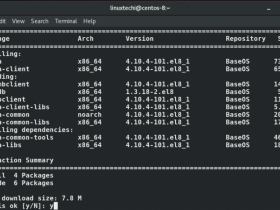

2.1 Install the Ceph packages

Run the following install command on each of the three nodes:

    [root@node1 ~]# yum -y install ceph ceph-radosgw
    [root@node2 ~]# yum -y install ceph ceph-radosgw
    [root@node3 ~]# yum -y install ceph ceph-radosgw

2.2 Create the first mon node

1. Log in to node1 and check that the /etc/ceph directory has been created:

    [root@node1 ~]# ls /etc/ceph/
    rbdmap

2. Create the Ceph configuration file:

[root@node1 ~]# touch /etc/ceph/ceph.conf

3. Run uuidgen to get a unique identifier to use as the cluster ID (fsid):

    [root@node1 ~]# uuidgen
    bdfb36e0-23ed-4e2f-8bc6-b98d9fa9136c

4. Edit ceph.conf:

[root@node1 ~]# vim /etc/ceph/ceph.conf

    [global]
    fsid = bdfb36e0-23ed-4e2f-8bc6-b98d9fa9136c
    # Make node1 the initial mon
    mon initial members = node1
    # Address of the mon
    mon host = 192.168.1.10
    public network = 192.168.1.0/24
    auth cluster required = cephx
    auth service required = cephx
    auth client required = cephx
    osd journal size = 1024
    # Number of replicas
    osd pool default size = 3
    # Minimum number of replicas
    osd pool default min size = 1
    osd pool default pg num = 256
    osd pool default pgp num = 256
    osd crush chooseleaf type = 1
    osd_mkfs_type = xfs
    max mds = 5
    mds max file size = 100000000000000
    mds cache size = 1000000
    # Mark an OSD out 900 s after it goes down, so the data mapped to it is remapped to other OSDs
    mon osd down out interval = 900

    [mon]
    # Allow 0.5 s of clock drift (default 0.05 s). The cluster contains heterogeneous PCs whose
    # clocks always drift by more than 0.05 s, so the limit is simply raised to 0.5 s.
    mon clock drift allowed = .50

5. Create a keyring for the monitors and generate the mon. key:

    [root@node1 ~]# ceph-authtool --create-keyring /tmp/ceph.mon.keyring --gen-key -n mon. --cap mon 'allow *'
    creating /tmp/ceph.mon.keyring

6. Create the client.admin keyring used to administer the cluster, and grant it access:

    [root@node1 ~]# sudo ceph-authtool --create-keyring /etc/ceph/ceph.client.admin.keyring --gen-key -n client.admin --set-uid=0 --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *'
    creating /etc/ceph/ceph.client.admin.keyring

7. Generate a bootstrap-osd keyring, creating a client.bootstrap-osd user and adding it to the keyring:

    [root@node1 ~]# sudo ceph-authtool --create-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring --gen-key -n client.bootstrap-osd --cap mon 'profile bootstrap-osd'
    creating /var/lib/ceph/bootstrap-osd/ceph.keyring

8. Import the generated keys into ceph.mon.keyring:

    [root@node1 ~]# sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /etc/ceph/ceph.client.admin.keyring
    importing contents of /etc/ceph/ceph.client.admin.keyring into /tmp/ceph.mon.keyring
    [root@node1 ~]# sudo ceph-authtool /tmp/ceph.mon.keyring --import-keyring /var/lib/ceph/bootstrap-osd/ceph.keyring
    importing contents of /var/lib/ceph/bootstrap-osd/ceph.keyring into /tmp/ceph.mon.keyring

9. Generate a monmap from the hostname, host IP address(es) and FSID, and save it as /tmp/monmap:

    [root@node1 ~]# monmaptool --create --add node1 192.168.1.10 --fsid bdfb36e0-23ed-4e2f-8bc6-b98d9fa9136c /tmp/monmap
    monmaptool: monmap file /tmp/monmap
    monmaptool: set fsid to bdfb36e0-23ed-4e2f-8bc6-b98d9fa9136c
    monmaptool: writing epoch 0 to /tmp/monmap (1 monitors)

10. Create the default mon data directory:

[root@node1 ~]# sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node1

11. Change the owner and group of ceph.mon.keyring to ceph:

[root@node1 ~]# chown ceph.ceph /tmp/ceph.mon.keyring

12. Initialize the mon:

    [root@node1 ~]# sudo -u ceph ceph-mon --mkfs -i node1 --monmap /tmp/monmap --keyring /tmp/ceph.mon.keyring
    ceph-mon: set fsid to bdfb36e0-23ed-4e2f-8bc6-b98d9fa9136c
    ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node1 for mon.node1

13. Create an empty done file, which marks the mon as created so it is not set up again:

[root@node1 ~]# sudo touch /var/lib/ceph/mon/ceph-node1/done

14. Start the mon:

[root@node1 ~]# systemctl start ceph-mon@node1

15. Check its status:

    [root@node1 ~]# systemctl status ceph-mon@node1
    ● ceph-mon@node1.service - Ceph cluster monitor daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; disabled; vendor preset: disabled)
       Active: active (running) since Fri 2018-06-29 13:36:27 CST; 5min ago
     Main PID: 1936 (ceph-mon)

16. Enable the mon at boot:

    [root@node1 ~]# systemctl enable ceph-mon@node1
    Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node1.service to /usr/lib/systemd/system/ceph-mon@.service.
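Steps 5 to 16 can be collected into one function. This is a sketch that assumes ceph.conf is already in place and uses the same paths as above. `bootstrap_first_mon` and the `DRY_RUN` switch are hypothetical helpers, not Ceph tools. With `DRY_RUN=1` the function only prints the commands it would run, which is a cheap way to review the sequence before touching a node.

```shell
#!/bin/bash
# Print the command instead of running it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Sketch of steps 5-16. Usage: bootstrap_first_mon <hostname> <ip> <fsid>
bootstrap_first_mon() {
    local host="$1" ip="$2" fsid="$3"
    local mon_keyring=/tmp/ceph.mon.keyring
    local admin_keyring=/etc/ceph/ceph.client.admin.keyring
    local osd_bootstrap=/var/lib/ceph/bootstrap-osd/ceph.keyring

    # Keys for mon., client.admin and client.bootstrap-osd
    run ceph-authtool --create-keyring "$mon_keyring" --gen-key -n mon. --cap mon 'allow *' || return 1
    run ceph-authtool --create-keyring "$admin_keyring" --gen-key -n client.admin --set-uid=0 \
        --cap mon 'allow *' --cap osd 'allow *' --cap mds 'allow *' --cap mgr 'allow *' || return 1
    run ceph-authtool --create-keyring "$osd_bootstrap" --gen-key -n client.bootstrap-osd \
        --cap mon 'profile bootstrap-osd' || return 1
    run ceph-authtool "$mon_keyring" --import-keyring "$admin_keyring" || return 1
    run ceph-authtool "$mon_keyring" --import-keyring "$osd_bootstrap" || return 1

    # Initial monmap that contains only this monitor
    run monmaptool --create --add "$host" "$ip" --fsid "$fsid" /tmp/monmap || return 1

    # Data directory, mkfs and the "done" marker
    run sudo -u ceph mkdir -p "/var/lib/ceph/mon/ceph-$host" || return 1
    run chown ceph:ceph "$mon_keyring" || return 1
    run sudo -u ceph ceph-mon --mkfs -i "$host" --monmap /tmp/monmap --keyring "$mon_keyring" || return 1
    run touch "/var/lib/ceph/mon/ceph-$host/done" || return 1

    # Start now and at every boot
    run systemctl start "ceph-mon@$host" || return 1
    run systemctl enable "ceph-mon@$host"
}
```

For example, `DRY_RUN=1 bootstrap_first_mon node1 192.168.1.10 bdfb36e0-23ed-4e2f-8bc6-b98d9fa9136c` lists the twelve commands. Dropping `DRY_RUN` runs them, stopping at the first failure.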

2.3 Add mon node node2

1. Copy the configuration and key files generated on node1 to node2:

    [root@node1 ~]# scp /etc/ceph/* root@node2:/etc/ceph/
    [root@node1 ~]# scp /var/lib/ceph/bootstrap-osd/ceph.keyring root@node2:/var/lib/ceph/bootstrap-osd/
    [root@node1 ~]# scp /tmp/ceph.mon.keyring root@node2:/tmp/ceph.mon.keyring

2. Create the default mon data directory on node2:

[root@node2 ~]# sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node2

3. On node2, change the owner and group of ceph.mon.keyring to ceph:

[root@node2 ~]# chown ceph.ceph /tmp/ceph.mon.keyring

4. Fetch the mon key and the current monmap:

    [root@node2 ~]# ceph auth get mon. -o /tmp/ceph.mon.keyring
    exported keyring for mon.
    [root@node2 ~]# ceph mon getmap -o /tmp/ceph.mon.map
    got monmap epoch 1

5. Initialize the mon:

    [root@node2 ~]# sudo -u ceph ceph-mon --mkfs -i node2 --monmap /tmp/ceph.mon.map --keyring /tmp/ceph.mon.keyring
    ceph-mon: set fsid to 8ca723b0-c350-4807-9c2a-ad6c442616aa
    ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node2 for mon.node2

6. Create an empty done file, which marks the mon as created so it is not set up again:

[root@node2 ~]# sudo touch /var/lib/ceph/mon/ceph-node2/done

7. Add the new mon to the cluster's monitor list:

    [root@node2 ~]# ceph mon add node2 192.168.1.11:6789
    adding mon.node2 at 192.168.1.11:6789/0

8. Start the new mon:

    [root@node2 ~]# systemctl start ceph-mon@node2
    [root@node2 ~]# systemctl status ceph-mon@node2
    ● ceph-mon@node2.service - Ceph cluster monitor daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; disabled; vendor preset: disabled)
       Active: active (running) since Sat 2018-06-30 10:58:52 CST; 6s ago
     Main PID: 1555 (ceph-mon)

9. Enable the mon at boot:

    [root@node2 ~]# systemctl enable ceph-mon@node2
    Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node2.service to /usr/lib/systemd/system/ceph-mon@.service.

2.4 Add mon node node3

1. Copy the configuration and key files generated on node1 to node3:

    [root@node1 ~]# scp /etc/ceph/* root@node3:/etc/ceph/
    [root@node1 ~]# scp /var/lib/ceph/bootstrap-osd/ceph.keyring root@node3:/var/lib/ceph/bootstrap-osd/
    [root@node1 ~]# scp /tmp/ceph.mon.keyring root@node3:/tmp/ceph.mon.keyring

2. Create the default mon data directory on node3:

[root@node3 ~]# sudo -u ceph mkdir /var/lib/ceph/mon/ceph-node3

3. On node3, change the owner and group of ceph.mon.keyring to ceph:

[root@node3 ~]# chown ceph.ceph /tmp/ceph.mon.keyring

4. Fetch the mon key and the current monmap:

    [root@node3 ~]# ceph auth get mon. -o /tmp/ceph.mon.keyring
    exported keyring for mon.
    [root@node3 ~]# ceph mon getmap -o /tmp/ceph.mon.map
    got monmap epoch 1

5. Initialize the mon:

    [root@node3 ~]# sudo -u ceph ceph-mon --mkfs -i node3 --monmap /tmp/ceph.mon.map --keyring /tmp/ceph.mon.keyring
    ceph-mon: set fsid to 8ca723b0-c350-4807-9c2a-ad6c442616aa
    ceph-mon: created monfs at /var/lib/ceph/mon/ceph-node3 for mon.node3

6. Create an empty done file, which marks the mon as created so it is not set up again:

[root@node3 ~]# sudo touch /var/lib/ceph/mon/ceph-node3/done

7. Add the new mon to the cluster's monitor list:

    [root@node3 ~]# ceph mon add node3 192.168.1.12:6789
    adding mon.node3 at 192.168.1.12:6789/0

8. Start the new mon:

    [root@node3 ~]# systemctl start ceph-mon@node3
    [root@node3 ~]# systemctl status ceph-mon@node3
    ● ceph-mon@node3.service - Ceph cluster monitor daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-mon@.service; disabled; vendor preset: disabled)
       Active: active (running) since Sat 2018-06-30 11:16:00 CST; 4s ago
     Main PID: 1594 (ceph-mon)

9. Enable the mon at boot:

    [root@node3 ~]# systemctl enable ceph-mon@node3
    Created symlink from /etc/systemd/system/ceph-mon.target.wants/ceph-mon@node3.service to /usr/lib/systemd/system/ceph-mon@.service.
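Sections 2.3 and 2.4 differ only in hostname and IP, so steps 2 to 9 can be sketched as one function. You run it on the new node after the files from step 1 have been copied over. `add_mon` and `DRY_RUN` are hypothetical helpers. Unlike the steps above, the sketch changes ownership after fetching the key and monmap, because `ceph auth get -o` rewrites /tmp/ceph.mon.keyring anyway.

```shell
#!/bin/bash
# Print the command instead of running it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Usage (on the new node): add_mon <hostname> <ip>
add_mon() {
    local host="$1" ip="$2"
    local keyring=/tmp/ceph.mon.keyring monmap=/tmp/ceph.mon.map

    run sudo -u ceph mkdir -p "/var/lib/ceph/mon/ceph-$host" || return 1
    # Fetch the mon. key and the current monmap from the running cluster
    run ceph auth get mon. -o "$keyring" || return 1
    run ceph mon getmap -o "$monmap" || return 1
    run chown ceph:ceph "$keyring" "$monmap" || return 1
    # Build the mon store and mark it as created
    run sudo -u ceph ceph-mon --mkfs -i "$host" --monmap "$monmap" --keyring "$keyring" || return 1
    run touch "/var/lib/ceph/mon/ceph-$host/done" || return 1
    # Register the new mon with the cluster, then start it now and at boot
    run ceph mon add "$host" "$ip:6789" || return 1
    run systemctl start "ceph-mon@$host" || return 1
    run systemctl enable "ceph-mon@$host"
}
```

For example, on node3: `add_mon node3 192.168.1.12`. Prefix it with `DRY_RUN=1` to review the commands first.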

Once all three mons are up, check the cluster status with ceph -s:

    [root@node1 ~]# ceph -s
        cluster 8ca723b0-c350-4807-9c2a-ad6c442616aa
         health HEALTH_ERR
                64 pgs are stuck inactive for more than 300 seconds
                64 pgs stuck inactive
                64 pgs stuck unclean
                no osds
         monmap e3: 3 mons at {node1=192.168.1.10:6789/0,node2=192.168.1.11:6789/0,node3=192.168.1.12:6789/0}
                election epoch 12, quorum 0,1,2 node1,node2,node3
         osdmap e1: 0 osds: 0 up, 0 in
                flags sortbitwise,require_jewel_osds
          pgmap v2: 64 pgs, 1 pools, 0 bytes data, 0 objects
                0 kB used, 0 kB / 0 kB avail
                      64 creating

Note: the HEALTH_ERR state is expected at this point because no OSDs have been added yet.

3 Deploy the OSDs

1. Before adding any OSDs, first create three host buckets named node1, node2 and node3 in the CRUSH map:

    [root@node1 ~]# ceph osd crush add-bucket node1 host
    added bucket node1 type host to crush map
    [root@node1 ~]# ceph osd crush add-bucket node2 host
    added bucket node2 type host to crush map
    [root@node1 ~]# ceph osd crush add-bucket node3 host
    added bucket node3 type host to crush map

2. Move the three new buckets under the default root:

    [root@node1 ~]# ceph osd crush move node1 root=default
    moved item id -2 name 'node1' to location {root=default} in crush map
    [root@node1 ~]# ceph osd crush move node2 root=default
    moved item id -3 name 'node2' to location {root=default} in crush map
    [root@node1 ~]# ceph osd crush move node3 root=default
    moved item id -4 name 'node3' to location {root=default} in crush map
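The two steps above amount to a loop over the hosts. Here is a sketch in which `setup_host_buckets` and `DRY_RUN` are hypothetical helper names and the host list is passed as arguments:

```shell
#!/bin/bash
# Print the command instead of running it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Create one CRUSH host bucket per argument and place it under root=default.
setup_host_buckets() {
    local host
    for host in "$@"; do
        run ceph osd crush add-bucket "$host" host || return 1
        run ceph osd crush move "$host" root=default || return 1
    done
}
```

For the hosts in 1.1 this is `setup_host_buckets node1 node2 node3`.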

3.1 Create the first OSD

1. Create the OSD:

[root@node1 ~]# ceph osd create 0

Note: 0 is the OSD ID. By default, IDs are assigned automatically in increasing order.

2. Prepare the disk.

ceph-disk partitions the disk automatically according to the settings in ceph.conf:

    [root@node1 ~]# ceph-disk prepare /dev/sdb
    Creating new GPT entries.
    The operation has completed successfully.
    The operation has completed successfully.
    meta-data=/dev/sdb1              isize=2048   agcount=4, agsize=1245119 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=4980475, imaxpct=25
             =                       sunit=0      swidth=0 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=2560, version=2
             =                       sectsz=512   sunit=0 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0
    The operation has completed successfully.

3. Format the first partition:

    [root@node1 ~]# mkfs.xfs -f /dev/sdb1
    meta-data=/dev/sdb1              isize=512    agcount=4, agsize=1245119 blks
             =                       sectsz=512   attr=2, projid32bit=1
             =                       crc=1        finobt=0, sparse=0
    data     =                       bsize=4096   blocks=4980475, imaxpct=25
             =                       sunit=0      swidth=0 blks
    naming   =version 2              bsize=4096   ascii-ci=0 ftype=1
    log      =internal log           bsize=4096   blocks=2560, version=2
             =                       sectsz=512   sunit=0 blks, lazy-count=1
    realtime =none                   extsz=4096   blocks=0, rtextents=0

4. Create the default OSD data directory:

[root@node1 ~]# mkdir -p /var/lib/ceph/osd/ceph-0

Note: the directory name has the form ceph-$ID. The OSD created in step 1 has ID 0, so the directory is ceph-0.

5. Mount the partition:

[root@node1 ~]# mount /dev/sdb1 /var/lib/ceph/osd/ceph-0/

6. Add an fstab entry so the partition is mounted at boot:

[root@node1 ~]# echo "/dev/sdb1 /var/lib/ceph/osd/ceph-0 xfs defaults 0 0" >> /etc/fstab

7. Initialize the OSD data directory:

    [root@node1 ~]# ceph-osd -i 0 --mkfs --mkkey
    2018-06-30 11:31:19.791042 7f7cbd911880 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway
    2018-06-30 11:31:19.808367 7f7cbd911880 -1 journal FileJournal::_open: disabling aio for non-block journal. Use journal_force_aio to force use of aio anyway
    2018-06-30 11:31:19.814628 7f7cbd911880 -1 filestore(/var/lib/ceph/osd/ceph-0) could not find #-1:7b3f43c4:::osd_superblock:0# in index: (2) No such file or directory
    2018-06-30 11:31:19.875860 7f7cbd911880 -1 created object store /var/lib/ceph/osd/ceph-0 for osd.0 fsid 8ca723b0-c350-4807-9c2a-ad6c442616aa
    2018-06-30 11:31:19.875985 7f7cbd911880 -1 auth: error reading file: /var/lib/ceph/osd/ceph-0/keyring: can't open /var/lib/ceph/osd/ceph-0/keyring: (2) No such file or directory
    2018-06-30 11:31:19.876241 7f7cbd911880 -1 created new key in keyring /var/lib/ceph/osd/ceph-0/keyring

8. Register the OSD key:

    [root@node1 ~]# ceph auth add osd.0 osd 'allow *' mon 'allow profile osd' -i /var/lib/ceph/osd/ceph-0/keyring
    added key for osd.0

9. Add the new OSD to the CRUSH map:

    [root@node1 ~]# ceph osd crush add osd.0 1.0 host=node1
    add item id 0 name 'osd.0' weight 1 at location {host=node1} to crush map

10. Change the owner and group of the OSD data directory to ceph:

[root@node1 ~]# chown -R ceph:ceph /var/lib/ceph/osd/ceph-0/

11. Start the new OSD:

    [root@node1 ~]# systemctl start ceph-osd@0
    [root@node1 ~]# systemctl status ceph-osd@0
    ● ceph-osd@0.service - Ceph object storage daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-osd@.service; disabled; vendor preset: disabled)
       Active: active (running) since Sat 2018-06-30 11:32:58 CST; 4s ago
      Process: 3408 ExecStartPre=/usr/lib/ceph/ceph-osd-prestart.sh --cluster ${CLUSTER} --id %i (code=exited, status=0/SUCCESS)
     Main PID: 3459 (ceph-osd)

12. Enable the OSD at boot:

    [root@node1 ~]# systemctl enable ceph-osd@0
    Created symlink from /etc/systemd/system/ceph-osd.target.wants/ceph-osd@0.service to /usr/lib/systemd/system/ceph-osd@.service.

13. Check the result with ceph osd tree:

    [root@node1 ~]# ceph osd tree
    ID WEIGHT  TYPE NAME      UP/DOWN REWEIGHT PRIMARY-AFFINITY
    -1 1.00000 root default
    -2 1.00000     host node1
     0 1.00000         osd.0       up  1.00000          1.00000
    -3       0     host node2
    -4       0     host node3
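Step 6 is easy to get wrong, because the mount point in fstab must match the OSD ID. One way to avoid that is to derive the fstab line from the ID and append it only when it is not already present. This is a sketch with hypothetical helper names, using the same `defaults` mount options as step 6.

```shell
#!/bin/bash
# Hypothetical helper: print the fstab entry for an OSD partition, deriving the
# mount point from the OSD ID so the two cannot drift apart.
osd_fstab_line() {
    local partition="$1" osd_id="$2"
    printf '%s /var/lib/ceph/osd/ceph-%s xfs defaults 0 0\n' "$partition" "$osd_id"
}

# Append the entry to the fstab file (default /etc/fstab) unless the exact line exists.
add_osd_fstab() {
    local partition="$1" osd_id="$2" fstab="${3:-/etc/fstab}"
    local line
    line=$(osd_fstab_line "$partition" "$osd_id")
    grep -qxF "$line" "$fstab" 2>/dev/null || echo "$line" >> "$fstab"
}
```

For the first OSD this is `add_osd_fstab /dev/sdb1 0`. Running it twice leaves a single entry.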

3.2 Add more OSDs

The remaining OSDs are added the same way as the first one. Below is a simple script that automates the steps:

    [root@node1 ~]# sh osd.sh
    Select the disk: sdc
    Select the host: node1
    Cleanup OSD ID is done.
    Directory is done.
    Prepare OSD Disk is done.
    Add OSD is done

Script contents (osd.sh):

    #!/bin/bash
    read -p "Select the disk: " DISK
    read -p "Select the host: " HOST

    ##### Cleanup OSD ID #####
    # Remove OSD IDs that were created but never started (state "new")
    for i in $(ceph osd dump | grep new | awk '{print $1}' | awk -F"." '{print $2}')
    do
        ceph osd rm $i
        ceph auth del osd.$i
    done
    echo -e "\033[40;32mCleanup OSD ID is done.\033[0m"

    ID=$(ceph osd create)
    DIRECTORY=/var/lib/ceph/osd/ceph-$ID

    ##### Create OSD Directory #####
    if [ -d $DIRECTORY ] ; then
        rm -rf $DIRECTORY
    fi
    mkdir -p $DIRECTORY
    if [ $? = 0 ] ; then
        echo -e "\033[40;32mDirectory is done.\033[0m"
    else
        exit 1    # "break" only works inside a loop
    fi

    ##### Prepare OSD Disk #####
    # Zap and partition the disk only if it does not already carry a Ceph partition
    blkid | grep $DISK | grep ceph &> /dev/null
    if [ $? != 0 ] ; then
        ceph-disk zap /dev/$DISK &> /dev/null
        ceph-disk prepare /dev/$DISK &> /dev/null
    fi
    PARTITION=/dev/${DISK}1
    mkfs.xfs -f $PARTITION &> /dev/null
    echo "$PARTITION $DIRECTORY xfs rw,noatime,attr2,inode64,noquota 0 0" >> /etc/fstab
    mount -o rw,noatime,attr2,inode64,noquota $PARTITION $DIRECTORY
    if [ $? = 0 ] ; then
        echo -e "\033[40;32mPrepare OSD Disk is done.\033[0m"
    else
        exit 1
    fi

    ##### Add OSD #####
    ceph-osd -i $ID --mkfs --mkkey &> /dev/null
    ceph auth add osd.$ID osd 'allow *' mon 'allow profile osd' -i $DIRECTORY/keyring &> /dev/null
    ceph osd crush add osd.$ID 1.0 host=$HOST &> /dev/null
    chown -R ceph:ceph /var/lib/ceph/osd
    systemctl start ceph-osd@$ID &> /dev/null
    systemctl enable ceph-osd@$ID &> /dev/null
    systemctl status ceph-osd@$ID &> /dev/null
    if [ $? = 0 ] ; then
        echo -e "\033[40;32mAdd OSD is done\033[0m"
    fi

Once all OSDs have been added, check ceph osd tree:

    [root@node1 ~]# ceph osd tree
    ID WEIGHT  TYPE NAME      UP/DOWN REWEIGHT PRIMARY-AFFINITY
    -1 9.00000 root default
    -2 3.00000     host node1
     6 1.00000         osd.6       up  1.00000          1.00000
     1 1.00000         osd.1       up  1.00000          1.00000
     4 1.00000         osd.4       up  1.00000          1.00000
    -3 3.00000     host node2
     7 1.00000         osd.7       up  1.00000          1.00000
     2 1.00000         osd.2       up  1.00000          1.00000
     5 1.00000         osd.5       up  1.00000          1.00000
    -4 3.00000     host node3
     0 1.00000         osd.0       up  1.00000          1.00000
     3 1.00000         osd.3       up  1.00000          1.00000
     8 1.00000         osd.8       up  1.00000          1.00000

4 Fixing health warnings

4.1 too few PGs per OSD

1. Check the status with ceph -s:

    [root@node1 ~]# ceph -s
        cluster ffcb01ea-e7e3-4097-8551-dde0256f610a
         health HEALTH_WARN
                too few PGs per OSD (21 < min 30)
         monmap e3: 3 mons at {node1=192.168.1.10:6789/0,node2=192.168.1.11:6789/0,node3=192.168.1.12:6789/0}
                election epoch 8, quorum 0,1,2 node1,node2,node3
         osdmap e52: 9 osds: 9 up, 9 in
                flags sortbitwise,require_jewel_osds
          pgmap v142: 64 pgs, 1 pools, 0 bytes data, 0 objects
                9519 MB used, 161 GB / 170 GB avail
                      64 active+clean

The HEALTH_WARN indicates that there are too few PGs per OSD.

PG calculation:

total PGs = ((Total_number_of_OSD * 100) / max_replication_count) / pool_count

The cluster currently has 9 OSDs, 3 replicas and one pool (the default rbd pool).

So the result is ((9 * 100) / 3) / 1 = 300. pg_num is usually set to the power of two closest to the result, and for 300 that is 256.

2. Check the current PG counts:

    [root@node1 ~]# ceph osd pool get rbd pg_num
    pg_num: 64
    [root@node1 ~]# ceph osd pool get rbd pgp_num
    pgp_num: 64

3. Set the new values manually:

    [root@node1 ~]# ceph osd pool set rbd pg_num 256
    set pool 0 pg_num to 256
    [root@node1 ~]# ceph osd pool set rbd pgp_num 256
    set pool 0 pgp_num to 256

4. Check the status again:

    [root@node1 ~]# ceph -s
        cluster ffcb01ea-e7e3-4097-8551-dde0256f610a
         health HEALTH_WARN
                clock skew detected on mon.node2, mon.node3
                Monitor clock skew detected
         monmap e3: 3 mons at {node1=192.168.1.10:6789/0,node2=192.168.1.11:6789/0,node3=192.168.1.12:6789/0}
                election epoch 16, quorum 0,1,2 node1,node2,node3
         osdmap e56: 9 osds: 9 up, 9 in
                flags sortbitwise,require_jewel_osds
          pgmap v160: 256 pgs, 1 pools, 0 bytes data, 0 objects
                9527 MB used, 161 GB / 170 GB avail
                     256 active+clean
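The PG rule of thumb from step 1 can be checked with shell arithmetic. This is a small sketch with hypothetical helper names. Integer division truncates, which does not matter when the result is only used to pick a power of two.

```shell
#!/bin/bash
# total PGs = ((OSDs * 100) / replicas) / pools, using integer arithmetic
pg_target() {
    local osds="$1" replicas="$2" pools="$3"
    echo $(( osds * 100 / replicas / pools ))
}

# Round to the nearest power of two; ties go to the lower value.
nearest_pow2() {
    local n="$1" lower=1
    while (( lower * 2 <= n )); do
        lower=$(( lower * 2 ))
    done
    local upper=$(( lower * 2 ))
    if (( n - lower <= upper - n )); then
        echo "$lower"
    else
        echo "$upper"
    fi
}
```

For this cluster, `nearest_pow2 "$(pg_target 9 3 1)"` prints 256, the value set in step 3.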

4.2 Monitor clock skew detected

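The output above shows a new warning. It usually means the clocks on the mon nodes differ too much. It can be resolved by raising the clock-drift parameters in ceph.conf.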

The modified section:

    [mon]
    mon clock drift allowed = 2
    mon clock drift warn backoff = 30

Check the status again:

    [root@node1 ~]# ceph -s
        cluster ffcb01ea-e7e3-4097-8551-dde0256f610a
         health HEALTH_OK
         monmap e3: 3 mons at {node1=192.168.1.10:6789/0,node2=192.168.1.11:6789/0,node3=192.168.1.12:6789/0}
                election epoch 22, quorum 0,1,2 node1,node2,node3
         osdmap e56: 9 osds: 9 up, 9 in
                flags sortbitwise,require_jewel_osds
          pgmap v160: 256 pgs, 1 pools, 0 bytes data, 0 objects
                9527 MB used, 161 GB / 170 GB avail
                     256 active+clean

Note: in a production environment, resolve this by setting up time synchronization on the nodes.
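As a sketch of that time synchronization, chrony (shipped with CentOS 7) can be installed and started on each node over SSH. Several assumptions apply here. It assumes root SSH access as used by the scp steps above. It also relies on the default server list in /etc/chrony.conf; in an isolated network you would point that file at a local NTP server instead. `enable_time_sync` and `DRY_RUN` are hypothetical helper names.

```shell
#!/bin/bash
# Print the command instead of running it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Install, enable and start chronyd on every host given as an argument.
enable_time_sync() {
    local host
    for host in "$@"; do
        run ssh "root@$host" yum -y install chrony || return 1
        run ssh "root@$host" systemctl enable chronyd || return 1
        run ssh "root@$host" systemctl start chronyd || return 1
    done
}
```

For example, run `enable_time_sync node1 node2 node3`. Afterwards `chronyc tracking` on each node shows the current offset.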

5 Deploy the MDS

5.1 Create the first MDS

1. Create a directory for the MDS (metadata server):

[root@node1 ~]# mkdir -p /var/lib/ceph/mds/ceph-node1

2. Create a key for the bootstrap-mds client. (Note: this step can be skipped if the keyring below already exists in the directory.)

    [root@node1 ~]# ceph-authtool --create-keyring /var/lib/ceph/bootstrap-mds/ceph.keyring --gen-key -n client.bootstrap-mds
    creating /var/lib/ceph/bootstrap-mds/ceph.keyring

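3. Create the client.bootstrap-mds user in the Ceph auth database, using the key created above and granting its capabilities. (Note: check the user list with ceph auth list. If client.bootstrap-mds already exists, this step can be skipped.)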

[root@node1 ~]# ceph auth add client.bootstrap-mds mon 'allow profile bootstrap-mds' -i /var/lib/ceph/bootstrap-mds/ceph.keyring

4. Create a ceph.bootstrap-mds.keyring file in root's home directory:

[root@node1 ~]# touch /root/ceph.bootstrap-mds.keyring

5. Import the key from /var/lib/ceph/bootstrap-mds/ceph.keyring into ceph.bootstrap-mds.keyring in the home directory:

    [root@node1 ~]# ceph-authtool --import-keyring /var/lib/ceph/bootstrap-mds/ceph.keyring ceph.bootstrap-mds.keyring
    importing contents of /var/lib/ceph/bootstrap-mds/ceph.keyring into ceph.bootstrap-mds.keyring

6. Create the mds.node1 user in the Ceph auth database, grant its capabilities and generate its key. The key is saved to /var/lib/ceph/mds/ceph-node1/keyring:

[root@node1 ~]# ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node1 osd 'allow rwx' mds 'allow' mon 'allow profile mds' -o /var/lib/ceph/mds/ceph-node1/keyring

7. Start the MDS:

    [root@node1 ~]# systemctl start ceph-mds@node1
    [root@node1 ~]# systemctl status ceph-mds@node1
    ● ceph-mds@node1.service - Ceph metadata server daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-mds@.service; disabled; vendor preset: disabled)
       Active: active (running) since Mon 2018-07-02 10:54:17 CST; 5s ago
     Main PID: 18319 (ceph-mds)

8. Enable the MDS at boot:

    [root@node1 ~]# systemctl enable ceph-mds@node1
    Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node1.service to /usr/lib/systemd/system/ceph-mds@.service.

5.2 Add the second MDS

1. Copy the key files to node2:

    [root@node1 ~]# scp ceph.bootstrap-mds.keyring node2:/root/ceph.bootstrap-mds.keyring
    [root@node1 ~]# scp /var/lib/ceph/bootstrap-mds/ceph.keyring node2:/var/lib/ceph/bootstrap-mds/ceph.keyring

2. Create the MDS data directory on node2:

[root@node2 ~]# mkdir -p /var/lib/ceph/mds/ceph-node2

3. Create the mds.node2 user in the Ceph auth database, grant its capabilities and generate its key. The key is saved to /var/lib/ceph/mds/ceph-node2/keyring:

[root@node2 ~]# ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node2 osd 'allow rwx' mds 'allow' mon 'allow profile mds' -o /var/lib/ceph/mds/ceph-node2/keyring

4. Start the MDS:

    [root@node2 ~]# systemctl start ceph-mds@node2
    [root@node2 ~]# systemctl status ceph-mds@node2
    ● ceph-mds@node2.service - Ceph metadata server daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-mds@.service; disabled; vendor preset: disabled)
       Active: active (running) since Mon 2018-07-02 11:21:09 CST; 3s ago
     Main PID: 14164 (ceph-mds)

5. Enable the MDS at boot:

    [root@node2 ~]# systemctl enable ceph-mds@node2
    Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node2.service to /usr/lib/systemd/system/ceph-mds@.service.

5.3 Add the third MDS

1. Copy the key files to node3:

    [root@node1 ~]# scp ceph.bootstrap-mds.keyring node3:/root/ceph.bootstrap-mds.keyring
    [root@node1 ~]# scp /var/lib/ceph/bootstrap-mds/ceph.keyring node3:/var/lib/ceph/bootstrap-mds/ceph.keyring

2. Create the MDS data directory on node3:

[root@node3 ~]# mkdir -p /var/lib/ceph/mds/ceph-node3

3. Create the mds.node3 user in the Ceph auth database, grant its capabilities and generate its key. The key is saved to /var/lib/ceph/mds/ceph-node3/keyring:

[root@node3 ~]# ceph --cluster ceph --name client.bootstrap-mds --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring auth get-or-create mds.node3 osd 'allow rwx' mds 'allow' mon 'allow profile mds' -o /var/lib/ceph/mds/ceph-node3/keyring

4. Start the MDS:

    [root@node3 ~]# systemctl restart ceph-mds@node3
    [root@node3 ~]# systemctl status ceph-mds@node3
    ● ceph-mds@node3.service - Ceph metadata server daemon
       Loaded: loaded (/usr/lib/systemd/system/ceph-mds@.service; disabled; vendor preset: disabled)
       Active: active (running) since Mon 2018-07-02 11:11:41 CST; 15min ago
     Main PID: 31940 (ceph-mds)

5. Enable the MDS at boot:

    [root@node3 ~]# systemctl enable ceph-mds@node3
    Created symlink from /etc/systemd/system/ceph-mds.target.wants/ceph-mds@node3.service to /usr/lib/systemd/system/ceph-mds@.service.
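Sections 5.2 and 5.3 repeat the same steps with a different hostname. They can be sketched as one function that you run on the target node after the bootstrap-mds keyring has been copied there. `add_mds` and `DRY_RUN` are hypothetical helpers. The sketch also changes the owner of the MDS directory to ceph, which the steps above skip, because the Jewel systemd units start ceph-mds as the ceph user.

```shell
#!/bin/bash
# Print the command instead of running it when DRY_RUN=1 (hypothetical switch).
run() {
    if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

# Usage (on the target node): add_mds <hostname>
add_mds() {
    local host="$1"
    local dir="/var/lib/ceph/mds/ceph-$host"

    run mkdir -p "$dir" || return 1
    # Create mds.<host> using the bootstrap-mds identity and save its key
    run ceph --cluster ceph --name client.bootstrap-mds \
        --keyring /var/lib/ceph/bootstrap-mds/ceph.keyring \
        auth get-or-create "mds.$host" osd 'allow rwx' mds 'allow' mon 'allow profile mds' \
        -o "$dir/keyring" || return 1
    run chown -R ceph:ceph "$dir" || return 1
    # Start now and at every boot
    run systemctl start "ceph-mds@$host" || return 1
    run systemctl enable "ceph-mds@$host"
}
```

For example, run `add_mds node2` on node2, or `DRY_RUN=1 add_mds node2` to review the commands first.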
